Using Flow

Core Concepts

Flow Type System

Inspect Flow mirrors Inspect AI’s object model with corresponding Flow types:

- FlowJob — The top-level job definition. Under the hood it translates to an Inspect AI Eval Set. Contains a list of tasks and job settings.

- FlowTask — Configuration for a single evaluation task. Maps to Inspect AI Task parameters.

- FlowModel — Model configuration including API settings and generation settings. Maps to Inspect AI Model.

- FlowGenerateConfig — Model generation parameters. Maps to Inspect AI GenerateConfig.

- FlowSolver and FlowAgent — Solver and agent chain configuration. Map to Inspect AI Solver and Agent.

- FlowOptions — Runtime execution options. Maps to Inspect AI eval_set() parameters.

- FlowDefaults — System for setting default values across tasks, models, solvers, and agents.

Flow Config Structure

FlowJob is the primary interface for defining Flow workflows. All Flow operations—including parameter sweeps and matrix expansions—ultimately produce a list of tasks that FlowJob executes.

Required fields:

log_dir: Output path for logging results. Must be set before running the flow job. Supports S3 paths (e.g., s3://bucket/path).

Optional fields:

| Field | Description | Default |
|---|---|---|
| tasks | List of FlowTask objects defining the evaluations to run | None |
| includes | List of other flow configs to include (paths as strings or FlowInclude objects) | None |
| log_dir_create_unique | If True, append a numeric suffix to log_dir if it already exists. If False, reuse the existing directory (it must be empty, or log_dir_allow_dirty=True must be set) | False |
| python_version | Python version for the isolated virtual environment (e.g., "3.11") | Same as current environment |
| dependencies | PyPI packages, Git URLs, or local paths to install | None |
| env | Environment variables to set when running tasks | None |
| options | Runtime options (see FlowOptions reference) | None |
| defaults | Default values applied across tasks, models, and solvers | None |
| flow_metadata | Metadata stored in the flow config (not passed to Inspect AI) | None |

Creating Flow Configs

Your First Config

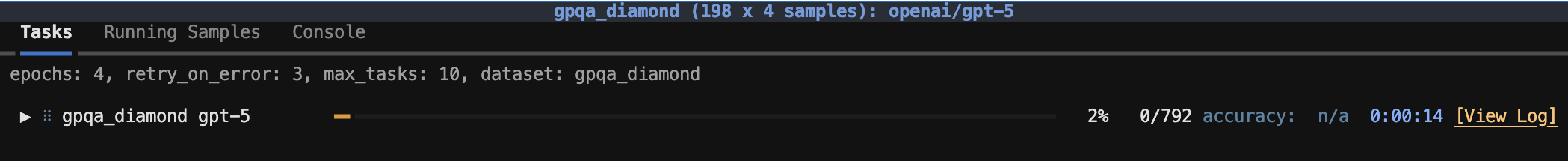

In Basic Examples, we showed a simple FlowJob. Let’s break down what’s happening:

first_config.py

```python
from inspect_flow import FlowJob, FlowTask

FlowJob(
    log_dir="logs",  # Specify the directory for storing logs
    dependencies=["inspect-evals"],  # Install the inspect-evals Python package
    tasks=[  # List evaluation tasks to run
        FlowTask(
            name="inspect_evals/gpqa_diamond",  # Specify task from registry by name
            model="openai/gpt-5",  # Specify model to evaluate by name
        ),
    ],
)
```

To run it, run the following command in your shell:

```bash
flow run first_config.py
```

What happens when you run this?

- Flow creates an isolated virtual environment
- Installs inspect-evals and openai (inferred from the model)
- Loads the gpqa_diamond task from the inspect_evals registry
- Runs the evaluation with GPT-5
- Stores results in logs/

Python config files are evaluated as normal Python code. The last expression in the file is used as the FlowJob. This means you can:

- Define variables and reuse them

- Use loops or comprehensions to generate task lists

- Import helper functions

- Add comments and documentation

Flow configs are just Python!
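To make "the last expression is the job" concrete, here is a minimal sketch using only the standard library's ast module. It illustrates the idea under our own assumptions and is not Flow's actual loader: the function name eval_last_expression is hypothetical, and a plain dict stands in for a FlowJob so the example runs without inspect_flow installed.

```python
import ast
from typing import Any


def eval_last_expression(source: str, filename: str = "<config>") -> Any:
    """Execute `source` and return the value of its final expression statement."""
    tree = ast.parse(source, filename=filename)
    if not tree.body or not isinstance(tree.body[-1], ast.Expr):
        raise ValueError("config must end with an expression")
    # Split off the final expression so it can be evaluated on its own.
    last = ast.fix_missing_locations(ast.Expression(body=tree.body.pop().value))
    namespace: dict[str, Any] = {}
    # Run everything before the final expression (imports, variables, helpers).
    exec(compile(tree, filename, "exec"), namespace)
    return eval(compile(last, filename, "eval"), namespace)


source = '''
models = ["openai/gpt-4o", "openai/gpt-4o-mini"]
dict(log_dir="logs", tasks=[f"task@{m}" for m in models])
'''
job = eval_last_expression(source)
print(job["tasks"])  # ['task@openai/gpt-4o', 'task@openai/gpt-4o-mini']
```

Because the preceding statements run first, variables, loops and helper functions defined earlier in the file are all visible to the final expression.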

Task Specification

In the example above, we used a registry name ("inspect_evals/gpqa_diamond"). Flow supports multiple ways to reference tasks:

```python
# Tasks from installed packages.
FlowTask(
    name="inspect_evals/gpqa_diamond",
    model="openai/gpt-5",
)
```

```python
# Auto-discovers `@task` decorated functions in the specified file and creates a task for each of them.
FlowTask(
    name="./my_task.py",
    model="openai/gpt-5",
)
```

```python
# Explicitly selects a specific function from the file.
FlowTask(
    name="./my_task.py@custom_eval",
    model="openai/gpt-5",
)
```

Task Configuration

FlowTask accepts parameters that map to Inspect AI Task fields. The examples below show commonly used fields; see the FlowTask reference documentation for the complete list of available parameters.

```python
from inspect_flow import FlowGenerateConfig, FlowTask

FlowTask(
    name="inspect_evals/mmlu_0_shot",
    model="openai/gpt-5",
    epochs=3,
    config=FlowGenerateConfig(
        temperature=0.7,
        max_tokens=1000,
    ),
    solver="chain_of_thought",
    args={"subject": "physics"},
    sandbox="docker",
    sample_id=[0, 1, 2],
)
```

- name: Task name. Maps to Inspect AI Task.name. Can be a registry name ("inspect_evals/mmlu"), a file path ("./task.py"), or a file with a function ("./task.py@eval_fn").
- model: Maps to Inspect AI Task.model. Optional model for this task; if not specified, the model from the INSPECT_EVAL_MODEL environment variable is used. Can be a string ("openai/gpt-5") or a FlowModel object for advanced configuration.
- epochs: Maps to Inspect AI Task.epochs. Number of times to repeat evaluation over the dataset samples. Can be an integer (epochs=3) or a FlowEpochs object that specifies a custom reducer (FlowEpochs(epochs=3, reducer="median")). By default, scores are combined across epochs using the "mean" reducer.
- config: Generation config. Maps to Inspect AI Task.config (GenerateConfig). Model generation parameters such as temperature, max_tokens, top_p, reasoning_effort, etc. These settings override the job-wide defaults (FlowDefaults.config) but are overridden by settings on FlowModel.config.
- solver: Solver chain. Maps to Inspect AI Task.solver. The algorithm(s) for solving the task. Can be a string ("chain_of_thought"), a FlowSolver object, a FlowAgent object, or a list of solvers for chaining. Defaults to generate() if not specified.
- args: Task arguments. Maps to task function parameters. Dictionary of arguments passed to the task constructor or @task decorated function. Enables parameterization of tasks (e.g., selecting dataset subsets, configuring difficulty levels).
- sandbox: Sandbox environment. Maps to Inspect AI Task.sandbox. Can be a string ("docker", "local"), a tuple with additional config, or a SandboxEnvironmentType object.
- sample_id: Sample selection. Evaluate specific samples from the dataset. Accepts a single ID (sample_id=0), a list of IDs (sample_id=[0, 1, 2]), or a list of string IDs.
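The three name forms accepted by FlowTask.name (registry name, file path, and file with function) can be told apart from the string alone. The sketch below shows one way to classify them; the ".py" suffix check, the TaskRef dataclass and parse_task_name are illustrative assumptions, not Flow's actual resolution logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskRef:
    kind: str  # "registry", "file", or "file_function" (hypothetical labels)
    target: str  # registry name or file path
    function: Optional[str] = None  # function name after "@", if any


def parse_task_name(name: str) -> TaskRef:
    # Split on the last "@" so only a trailing "@function" is treated specially.
    path, sep, func = name.rpartition("@")
    if sep and path.endswith(".py"):
        return TaskRef("file_function", path, func)
    if name.endswith(".py"):
        return TaskRef("file", name)
    return TaskRef("registry", name)


print(parse_task_name("./my_task.py@custom_eval"))
```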

Model Specification

Models can be specified as simple strings or as FlowModel objects for more control:

```python
from inspect_flow import FlowGenerateConfig, FlowModel, FlowTask

# Model as a simple string
FlowTask(name="task", model="openai/gpt-5")
```

```python
# Model as a FlowModel with generation settings and a custom endpoint
FlowTask(
    name="task",
    model=FlowModel(
        name="openai/gpt-5",
        config=FlowGenerateConfig(
            reasoning_effort="medium",
            max_connections=10,
        ),
        base_url="https://custom-endpoint.com",
        api_key="${CUSTOM_API_KEY}",
    ),
)
```

```python
# For agent evaluations with multiple roles
FlowTask(
    name="multi_agent_task",
    model_roles={
        "assistant": "openai/gpt-5",
        "critic": "anthropic/claude-3-5-sonnet",
    },
)
```

Use FlowModel when you need to:

- Set model-specific generation configs

- Use custom API endpoints

- Configure API keys per model

- Organize complex multi-model setups

Dependency Management

Inspect Flow automatically creates isolated virtual environments for each workflow run, ensuring repeatability and avoiding dependency conflicts with your system Python environment.

How Virtual Environments Work

When you run flow run config.py, Flow:

- Creates a temporary virtual environment with uv
- Installs your specified dependencies plus auto-detected model provider packages
- Executes your evaluations in this isolated environment
- Cleans up the temporary environment after completion (logs persist in log_dir)

Specifying Dependencies

The dependencies field in FlowJob accepts multiple types of package specifiers:

```python
# Standard PyPI package names with optional version specifiers.
FlowJob(
    dependencies=[
        "inspect-evals",
        "pandas==2.0.0",
    ],
    tasks=[...],
)
```

```python
# Install directly from Git repositories. Use `@commit_hash` to pin to specific versions for repeatability.
FlowJob(
    dependencies=[
        "git+https://github.com/UKGovernmentBEIS/inspect_evals@ef181cd",
    ],
    tasks=[...],
)
```

```python
# Install local packages using relative or absolute paths.
FlowJob(
    dependencies=[
        "./my_custom_eval",
        "../shared/utils",
    ],
    tasks=[...],
)
```

Python Version Control

Specify the Python version for your job’s virtual environment:

```python
FlowJob(
    python_version="3.11",
    dependencies=["inspect-evals"],
    tasks=[...],
)
```

To verify which Python version will be used, run:

```bash
flow config config.py --resolve
```

This shows the resolved configuration, including the Python version that will be used.

For repeatable workflows:

- Pin PyPI package versions: "inspect-evals==0.3.15"
- Pin Git commits: "git+https://github.com/user/repo@commit_hash"
- Specify python_version explicitly

Defaults and Overrides

Inspect Flow provides a powerful defaults system to avoid repetition when configuring evaluations. The FlowDefaults field lets you set default values that cascade across tasks, models, solvers, and agents—with more specific settings overriding less specific ones.

The FlowDefaults System

FlowDefaults supports multiple levels of default configuration:

```python
from inspect_flow import (
    FlowJob,
    FlowDefaults,
    FlowGenerateConfig,
    FlowModel,
    FlowSolver,
    FlowAgent,
    FlowTask,
)

FlowJob(
    defaults=FlowDefaults(
        config=FlowGenerateConfig(
            max_connections=10,
        ),
        model=FlowModel(
            model_args={"arg": "foo"},
        ),
        model_prefix={
            "openai/": FlowModel(
                config=FlowGenerateConfig(
                    max_connections=20
                ),
            ),
        },
        solver=FlowSolver(...),
        solver_prefix={"chain_of_thought": ...},
        agent=FlowAgent(...),
        agent_prefix={"inspect/": ...},
        task=FlowTask(...),
        task_prefix={"inspect_evals/": ...},
    ),
    tasks=[...],
)
```

- config: Default model generation options. Overridden by settings on FlowTask and FlowModel.
- model: Field defaults for models.
- model_prefix: Model defaults for model name prefixes. Overrides FlowDefaults.config and FlowDefaults.model. If multiple prefixes match, the longest prefix wins.
- solver: Field defaults for solvers.
- solver_prefix: Solver defaults for solver name prefixes. Overrides FlowDefaults.solver. If multiple prefixes match, the longest prefix wins.
- agent: Field defaults for agents.
- agent_prefix: Agent defaults for agent name prefixes. Overrides FlowDefaults.agent. If multiple prefixes match, the longest prefix wins.
- task: Field defaults for tasks.
- task_prefix: Task defaults for task name prefixes. Overrides FlowDefaults.config and FlowDefaults.task. If multiple prefixes match, the longest prefix wins.
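The "longest prefix wins" rule shared by model_prefix, solver_prefix, agent_prefix and task_prefix can be sketched in a few lines. This is an illustration of the rule, not Flow's code; match_prefix and the max_connections values are hypothetical.

```python
from typing import Optional, TypeVar

T = TypeVar("T")


def match_prefix(name: str, prefix_defaults: dict[str, T]) -> Optional[T]:
    # Collect every prefix the name starts with, then prefer the longest one.
    matches = [prefix for prefix in prefix_defaults if name.startswith(prefix)]
    if not matches:
        return None
    return prefix_defaults[max(matches, key=len)]


# Hypothetical prefix defaults: a broad "openai/" entry and a narrower one.
prefix_defaults = {
    "openai/": {"max_connections": 20},
    "openai/gpt-5": {"max_connections": 5},
}
print(match_prefix("openai/gpt-5-mini", prefix_defaults))  # {'max_connections': 5}
```

Note that "openai/gpt-5" also matches "openai/gpt-5-mini", because prefixes are compared as plain strings.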

Merge Priority and Behavior

Defaults follow a hierarchy where more specific settings override less specific ones:

For Models:

- Global config defaults (defaults.config), lowest priority
- Global model defaults (defaults.model)
- Model prefix defaults (defaults.model_prefix)
- Task-specific config (task.config)
- Model-specific config (model.config), highest priority

Example hierarchy in action:

hierarchy.py

```python
from inspect_flow import FlowDefaults, FlowGenerateConfig, FlowJob, FlowModel, FlowTask

FlowJob(
    defaults=FlowDefaults(
        # Global defaults: temperature=0.0, max_tokens=100
        config=FlowGenerateConfig(
            temperature=0.0,
            max_tokens=100,
        ),
        # Prefix defaults override: temperature=0.5 (for OpenAI models)
        model_prefix={
            "openai/": FlowModel(
                config=FlowGenerateConfig(temperature=0.5)
            )
        },
    ),
    tasks=[
        FlowTask(
            name="task",
            # Task config overrides: temperature=0.7
            config=FlowGenerateConfig(temperature=0.7),
            # Model config wins: temperature=1.0, max_tokens=100
            model=FlowModel(
                name="openai/gpt-4o",
                config=FlowGenerateConfig(temperature=1.0),
            ),
        )
    ],
)
```

Final result: temperature=1.0 (most specific), max_tokens=100 (from global defaults)

Setting a field to None means “not specified” — it won’t override existing values from defaults. This allows partial configs to merge cleanly:

```python
FlowTask(
    config=FlowGenerateConfig(temperature=0.5, max_tokens=None)
)
```

The max_tokens=None doesn’t override a default max_tokens value; it’s simply not set at this level.
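The merge order and the "None means not specified" rule can be reproduced with plain dictionaries. The sketch below is a simplified, flat model of the hierarchy example (Flow's real merge may also recurse into nested fields); merge_layers is a hypothetical helper, and each layer stands for one of the config levels listed earlier.

```python
from typing import Any, Optional


def merge_layers(*layers: Optional[dict[str, Any]]) -> dict[str, Any]:
    """Merge config layers ordered from lowest to highest priority."""
    result: dict[str, Any] = {}
    for layer in layers:
        if not layer:
            continue  # this level sets nothing
        for key, value in layer.items():
            if value is not None:  # None never overrides an earlier value
                result[key] = value
    return result


resolved = merge_layers(
    {"temperature": 0.0, "max_tokens": 100},  # defaults.config
    None,  # defaults.model (no config set in the example)
    {"temperature": 0.5},  # defaults.model_prefix["openai/"].config
    {"temperature": 0.7, "max_tokens": None},  # task.config (max_tokens left unset)
    {"temperature": 1.0},  # model.config
)
print(resolved)  # {'temperature': 1.0, 'max_tokens': 100}
```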

CLI Overrides

Override config values at runtime using the --set flag:

```bash
flow run config.py --set log_dir=./logs
flow run config.py --set options.limit=10
flow run config.py --set defaults.solver.args.tool_calls=none
flow run config.py --set 'options.metadata={"experiment": "baseline", "version": "v1"}'
flow run config.py \
  --set log_dir=./logs/experiment1 \
  --set options.limit=100 \
  --set defaults.config.temperature=0.5
```

Values passed with --set are merged as follows:

- Strings: Replace existing values
- Dicts: Replace existing values
- Lists:
  - String values append to the existing list
  - Lists replace the existing list
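The merge rules above can be sketched as a small function that applies one dotted --set assignment to a nested dict. This is not Flow's parser: apply_set is hypothetical, and we assume values are parsed as JSON when possible and otherwise kept as plain strings.

```python
import json
from typing import Any


def apply_set(config: dict[str, Any], assignment: str) -> None:
    """Apply one "dotted.path=value" override in place (illustrative only)."""
    path, _, raw = assignment.partition("=")
    *parents, leaf = path.split(".")
    target = config
    for key in parents:
        target = target.setdefault(key, {})  # walk or create nested dicts
    try:
        value: Any = json.loads(raw)  # assumption: JSON lists, dicts, numbers
    except json.JSONDecodeError:
        value = raw  # bare strings such as new_package or ./logs
    existing = target.get(leaf)
    if isinstance(existing, list) and isinstance(value, str):
        existing.append(value)  # a string appends to an existing list
    else:
        target[leaf] = value  # strings, dicts and lists replace


config: dict[str, Any] = {"dependencies": ["inspect-evals"], "options": {"limit": 5}}
apply_set(config, "dependencies=new_package")  # appends
apply_set(config, "options.limit=10")  # replaces
apply_set(config, 'options.metadata={"experiment": "baseline"}')  # sets a dict
print(config["dependencies"])  # ['inspect-evals', 'new_package']
```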

Examples:

```bash
# Appends to list
--set dependencies=new_package

# Replaces list
--set 'dependencies=["pkg1", "pkg2"]'
```

Environment Variables

Set config values via environment variables:

```bash
export INSPECT_FLOW_LOG_DIR=./logs/custom
export INSPECT_FLOW_LIMIT=50
export INSPECT_FLOW_SET="options.metadata={\"key\": \"value\"}"
export INSPECT_FLOW_VAR="task_min_priority=2"
flow run config.py
```

Supported environment variables:

| Variable | Equivalent Flag | Description |
|---|---|---|
| INSPECT_FLOW_LOG_DIR | --log-dir | Override log directory |
| INSPECT_FLOW_LOG_DIR_CREATE_UNIQUE | --log-dir-create-unique | Create a new log directory with a numeric suffix if it already exists |
| INSPECT_FLOW_LIMIT | --limit | Limit number of samples |
| INSPECT_FLOW_SET | --set | Set config overrides (can be specified multiple times) |
| INSPECT_FLOW_VAR | --var | Set variables for __flow_vars__ (can be specified multiple times) |

Defaults set via the command line (for example, --set defaults.config.temperature=0.5) override the defaults defined in the config, and can in turn be overridden by anything set explicitly (for example, on a task or model).

Dedicated environment variables (see Supported environment variables above) and corresponding CLI flags will override the --set flag.

Dedicated CLI flags have the highest priority.

Priority order of log-dir, log-dir-create-unique and limit (lowest to highest):

- FlowJob defaults
- Explicit setting on the FlowJob
- INSPECT_FLOW_SET environment variable
- CLI --set flags
- INSPECT_FLOW_LOG_DIR, INSPECT_FLOW_LOG_DIR_CREATE_UNIQUE and INSPECT_FLOW_LIMIT environment variables
- Explicit --log-dir, --log-dir-create-unique and --limit CLI flags

All other settings follow the priority order (lowest to highest):

- FlowJob defaults
- Explicit setting on the FlowJob
- INSPECT_FLOW_SET environment variable
- CLI --set flags

Debugging Defaults Resolution

To see the fully resolved configuration with all defaults applied:

```bash
flow config config.py --resolve
```

This shows exactly what settings each task will use after applying all defaults and overrides.

Configuration Inheritance

Inspect Flow supports configuration inheritance through two mechanisms:

Explicit Includes

Use the includes field to explicitly merge other config files into your job:

```python
FlowJob(
    includes=["defaults_flow.py"],
    log_dir="./logs/flow_test",
    tasks=["my_task"],
)
```

Included configs are merged recursively, with the current config’s values taking precedence over included values. For dictionaries, fields are merged deeply. For lists, items are concatenated with duplicates removed.
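The three include rules (current config wins, dicts merge deeply, lists concatenate without duplicates) can be modelled on plain dictionaries. This is a sketch, not Flow's merge code: merge_include is hypothetical, and putting the included list items before the current ones is our assumption.

```python
from typing import Any


def merge_include(included: dict[str, Any], current: dict[str, Any]) -> dict[str, Any]:
    """Merge `current` on top of `included` without mutating either."""
    merged = dict(included)
    for key, value in current.items():
        prior = merged.get(key)
        if isinstance(prior, dict) and isinstance(value, dict):
            merged[key] = merge_include(prior, value)  # deep-merge dictionaries
        elif isinstance(prior, list) and isinstance(value, list):
            combined: list[Any] = []
            for item in prior + value:  # concatenate, dropping duplicates
                if item not in combined:
                    combined.append(item)
            merged[key] = combined
        else:
            merged[key] = value  # the current config wins
    return merged


base = {"dependencies": ["inspect-evals"], "options": {"limit": 10, "max_samples": 16}}
current = {"dependencies": ["inspect-evals", "pandas"], "options": {"limit": 5}, "log_dir": "./logs/flow_test"}
merged = merge_include(base, current)
print(merged["options"])  # {'limit': 5, 'max_samples': 16}
```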

Automatic Discovery

Inspect Flow automatically discovers and includes files named _flow.py in parent directories. Starting from your config file’s location, it searches upward through the directory tree for _flow.py files and automatically merges them as base configurations.

This allows you to define shared defaults (model settings, dependencies, etc.) at a repository root that apply to all configs in subdirectories without explicit includes.
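The upward search for _flow.py files can be sketched with pathlib. This is an illustration under stated assumptions, not Flow's discovery code: find_flow_files is hypothetical, we assume the config's own directory is searched first, and we return files root-most first on the assumption that nearer files should be merged last.

```python
import tempfile
from pathlib import Path


def find_flow_files(config_path: str) -> list[Path]:
    """Return _flow.py files from the config's directory up to the filesystem root."""
    start = Path(config_path).resolve().parent
    found = [d / "_flow.py" for d in (start, *start.parents) if (d / "_flow.py").is_file()]
    return list(reversed(found))  # root-most first, nearest last


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp).resolve()
    (root / "evals" / "gpqa").mkdir(parents=True)
    (root / "_flow.py").write_text("FlowJob()\n")
    (root / "evals" / "_flow.py").write_text("FlowJob()\n")
    config = root / "evals" / "gpqa" / "config.py"
    config.write_text("")
    # Keep only files inside the temporary repository for a stable result.
    found = [p.relative_to(root) for p in find_flow_files(str(config)) if root in p.parents]

print(found)
```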

Parameter Sweeping

Parameter sweeping lets you systematically explore evaluation configurations by generating Cartesian products of parameters. Instead of manually writing every combination, Flow provides matrix and “with” functions to declaratively generate evaluation grids.

Matrix Functions (Cartesian Products)

Matrix functions generate all combinations of their parameters using Cartesian products.

tasks_matrix()

Generate task configurations by combining tasks with models, configs, solvers, and arguments:

tasks_matrix.py

```python
from inspect_flow import FlowJob, tasks_matrix

FlowJob(
    log_dir="logs",
    dependencies=["inspect-evals"],
    tasks=tasks_matrix(
        task=["inspect_evals/gpqa_diamond", "inspect_evals/mmlu_0_shot"],
        model=["openai/gpt-4o", "anthropic/claude-3-5-sonnet"],
    ),
)
```

This creates 4 tasks (2 tasks × 2 models).
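The expansion a matrix function performs is a Cartesian product, which the standard library's itertools.product computes directly. The sketch below uses plain dicts in place of FlowTask objects; the matrix helper is hypothetical, and treating a scalar as a one-element list mirrors how tasks_matrix(task="my_task", ...) is used in the examples.

```python
from itertools import product
from typing import Any


def matrix(**params: Any) -> list[dict[str, Any]]:
    """Return one dict per combination of the given parameter values."""
    keys = list(params)
    values = [v if isinstance(v, list) else [v] for v in params.values()]
    return [dict(zip(keys, combo)) for combo in product(*values)]


tasks = matrix(
    task=["inspect_evals/gpqa_diamond", "inspect_evals/mmlu_0_shot"],
    model=["openai/gpt-4o", "anthropic/claude-3-5-sonnet"],
)
print(len(tasks))  # 4
```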

models_matrix()

Generate model configurations with different generation settings:

models_matrix.py

```python
from inspect_flow import FlowGenerateConfig, FlowJob, models_matrix, tasks_matrix

FlowJob(
    log_dir="logs",
    dependencies=["inspect-evals"],
    tasks=tasks_matrix(
        task=[
            "inspect_evals/gpqa_diamond",
            "inspect_evals/mmlu_0_shot",
        ],
        model=models_matrix(
            model=[
                "openai/gpt-5",
                "openai/gpt-5-mini",
            ],
            config=[
                FlowGenerateConfig(reasoning_effort="minimal"),
                FlowGenerateConfig(reasoning_effort="low"),
                FlowGenerateConfig(reasoning_effort="medium"),
                FlowGenerateConfig(reasoning_effort="high"),
            ],
        ),
    ),
)
```

This creates 16 tasks (2 tasks × 2 models × 4 reasoning_effort values).

configs_matrix()

Generate generation config combinations by specifying individual parameters:

configs_matrix.py

```python
from inspect_flow import FlowJob, configs_matrix, models_matrix, tasks_matrix

FlowJob(
    log_dir="logs",
    dependencies=["inspect-evals"],
    tasks=tasks_matrix(
        task=[
            "inspect_evals/gpqa_diamond",
            "inspect_evals/mmlu_0_shot",
        ],
        model=models_matrix(
            model=[
                "openai/gpt-5",
                "openai/gpt-5-mini",
            ],
            config=configs_matrix(
                reasoning_effort=["minimal", "low", "medium", "high"],
            ),
        ),
    ),
)
```

This creates 16 tasks (2 tasks × 2 models × 4 reasoning_effort values).

solvers_matrix() and agents_matrix()

Generate solver or agent configurations with different arguments:

solvers_matrix.py

```python
from inspect_flow import FlowJob, solvers_matrix, tasks_matrix

FlowJob(
    log_dir="logs",
    tasks=tasks_matrix(
        task="my_task",
        solver=solvers_matrix(
            solver="chain_of_thought",
            args=[
                {"max_iterations": 3},
                {"max_iterations": 5},
                {"max_iterations": 10},
            ],
        ),
    ),
)
```

This creates 3 tasks (1 task × 3 solver configurations).

With Functions (Apply to All)

“With” functions apply the same value to all items in a list. Use these when you want to sweep over some parameters while keeping others constant.

tasks_with()

Apply common settings to multiple tasks:

tasks_with.py

```python
from inspect_flow import FlowGenerateConfig, FlowJob, tasks_with

FlowJob(
    tasks=tasks_with(
        task=["inspect_evals/gpqa_diamond", "inspect_evals/mmlu_0_shot"],
        model="openai/gpt-4o",  # Same model for both tasks
        config=FlowGenerateConfig(temperature=0.7),  # Same config for both
    )
)
```

This creates 2 tasks (2 tasks, each with the same model and config).
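Unlike a matrix function, a "with" function does not multiply the number of items: it copies each one and sets the shared fields. A minimal sketch over plain dicts follows; with_all is hypothetical, and here a shared value simply overwrites the item's field, which may differ from how Flow merges into an existing value.

```python
from typing import Any


def with_all(items: list[dict[str, Any]], **shared: Any) -> list[dict[str, Any]]:
    """Copy each item and set the shared fields on the copy."""
    return [{**item, **shared} for item in items]


tasks = with_all(
    [{"task": "inspect_evals/gpqa_diamond"}, {"task": "inspect_evals/mmlu_0_shot"}],
    model="openai/gpt-4o",
    config={"temperature": 0.7},
)
print(len(tasks))  # 2
```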

Combining Matrix and With

Mix parameter sweeps with common settings:

matrix_and_with.py

```python
from inspect_flow import (
    FlowJob,
    configs_matrix,
    tasks_matrix,
    tasks_with,
)

FlowJob(
    log_dir="logs",
    tasks=tasks_with(
        task=tasks_matrix(
            task=["task1", "task2"], config=configs_matrix(temperature=[0.0, 0.5, 1.0])
        ),  # Creates 6 tasks (2 × 3)
        model="openai/gpt-4o",  # Applied to all 6 tasks
        sandbox="docker",  # Applied to all 6 tasks
    ),
)
```

Nested Sweeps

Matrix functions can be nested to create complex parameter grids. Use the unpacking operator * to expand inner matrix results:

Example: Tasks with nested model sweep

nested_model_sweep.py

```python
from inspect_flow import FlowGenerateConfig, FlowJob, models_matrix, tasks_matrix

FlowJob(
    log_dir="logs",
    tasks=tasks_matrix(
        task=["inspect_evals/mmlu_0_shot", "inspect_evals/gpqa_diamond"],
        model=[
            "anthropic/claude-3-5-sonnet",  # Single model
            *models_matrix(  # Unpacks list of 4 model configs
                model=["openai/gpt-4o", "openai/gpt-4o-mini"],
                config=[
                    FlowGenerateConfig(reasoning_effort="low"),
                    FlowGenerateConfig(reasoning_effort="high"),
                ],
            ),
        ],  # Total: 1 + 4 = 5 models
    ),
)
```

This creates 10 tasks (2 tasks × 5 model configurations).

Example: Tasks with nested task sweep

nested_task_sweep.py

```python
from inspect_flow import FlowJob, FlowTask, tasks_matrix

FlowJob(
    log_dir="logs",
    tasks=tasks_matrix(
        task=[
            FlowTask(name="task1", args={"subset": "test"}),  # Single task
            *tasks_matrix(  # Unpacks list of 3 tasks
                task="task2",
                args=[
                    {"language": "en"},
                    {"language": "de"},
                    {"language": "fr"},
                ],
            ),
        ],  # Total: 1 + 3 = 4 tasks
        model=["model1", "model2"],
    ),
)
```

This creates 8 tasks (4 task variants × 2 models).

Parameter sweeps grow multiplicatively. A sweep with:

- 3 tasks
- 4 models
- 5 temperature values
- 3 solver configurations

results in 3 × 4 × 5 × 3 = 180 evaluations.

Always use --dry-run to check the number of evaluations before running expensive grids.
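Before reaching for --dry-run, the size of a grid can be estimated by hand: multiply the sizes of the swept dimensions, and remember that nested (unpacked) sweeps add their variants within a single dimension. The numbers below are the ones from the examples in this section.

```python
import math

# The example sweep: 3 tasks, 4 models, 5 temperatures, 3 solver configurations.
sweep = {"tasks": 3, "models": 4, "temperatures": 5, "solver_configs": 3}
print(math.prod(sweep.values()))  # 180

# Nested model sweep: 1 single model + models_matrix(2 models x 2 configs).
model_variants = 1 + 2 * 2
print(2 * model_variants)  # 10 tasks for 2 tasks x 5 model configurations
```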

Config Merging Behavior

When base objects already have values, matrix parameters are merged:

```python
from inspect_flow import FlowGenerateConfig, FlowTask, tasks_matrix

# Config fields merge recursively
tasks_matrix(
    task=FlowTask(
        name="task",
        config=FlowGenerateConfig(temperature=0.5),  # Base value
    ),
    config=[
        FlowGenerateConfig(max_tokens=1000),  # Adds max_tokens, keeps temperature=0.5
        FlowGenerateConfig(max_tokens=2000),  # Adds max_tokens, keeps temperature=0.5
    ],
)
```

Running Evaluations

Once you’ve defined your Flow configuration, you can execute evaluations using the flow run command. Flow also provides tools for previewing configurations and controlling runtime behavior.

The flow run Command

Execute your evaluation workflow:

```bash
flow run config.py
```

What happens when you run this:

- Flow loads your configuration file
- Creates an isolated virtual environment
- Installs dependencies
- Resolves all defaults and matrix expansions
- Executes evaluations via Inspect AI’s eval_set()
- Stores logs in log_dir
- Cleans up the temporary environment

Common CLI Flags

Preview without running:

```bash
flow run config.py --dry-run
```

Shows how many tasks would be executed without actually running them. Useful for validating large parameter sweeps. Example output:

```text
eval_set would be called with 24 tasks
```

Override log directory:

```bash
flow run config.py --log-dir ./experiments/baseline
```

Changes where logs and results are stored.

Runtime overrides:

```bash
flow run config.py \
  --set options.limit=100 \
  --set defaults.config.temperature=0.5
```

Override any configuration value at runtime. See CLI Overrides for more details.

The flow config Command

Preview your configuration before running:

Basic usage:

```bash
flow config config.py
```

Displays the parsed configuration as YAML with CLI overrides applied. Does not create a virtual environment or instantiate Python objects.

Full resolution:

```bash
flow config config.py --resolve
```

Shows the completely resolved configuration:

- Creates virtual environment
- Applies all defaults
- Expands all matrix functions
- Instantiates all Python objects

This is invaluable for debugging what settings will actually be used in your evaluations.

- flow config: Quick syntax check, verify overrides
- flow config --resolve: Debug defaults resolution, inspect final settings
- flow run --dry-run: Count tasks in parameter sweeps, validate before expensive runs
- flow run: Execute evaluations

Results and Logs

Flow Directory Structure

Evaluation results are stored in the log_dir:

```text
logs/
├── 2025-11-21T17-38-20+01-00_gpqa-diamond_KvJBGowidXSCLRhkKQbHYA.eval
├── 2025-11-21T17-38-20+01-00_mmlu-0-shot_Vnu2A3M2wPet5yobLiCQmZ.eval
├── .eval-set-id
├── eval-set.json
├── flow.yaml
└── ...
```

Directory structure:

- Flow passes the log_dir directly to Inspect AI eval_set() for evaluation log storage
- Inspect AI handles the actual evaluation log file naming and storage
- Log file naming conventions follow Inspect AI’s standards (see Inspect AI logging docs)
- Flow automatically saves the resolved configuration as flow.yaml in the log directory
- The .eval-set-id file contains the eval set identifier
- The eval-set.json file contains eval set metadata

Log formats:

- .eval: Binary Inspect AI log format (default, high-performance)
- .json: JSON format (if log_format="json" in FlowOptions)

Viewing Results

Using Inspect View:

```bash
inspect view
```

Opens the Inspect AI viewer to explore evaluation logs interactively.

S3 Support

Store logs directly to S3:

```python
FlowJob(
    log_dir="s3://my-bucket/experiments/baseline",
    tasks=[...],
)
```

Advanced Features

Metadata Management

Flow supports two types of metadata with distinct purposes: metadata and flow_metadata.

metadata (Inspect AI Metadata)

The metadata field in FlowOptions and FlowTask is passed directly to Inspect AI and stored in evaluation logs. Use this for tracking experiment information that should be accessible in Inspect AI’s log viewer and analysis tools.

Example:

metadata.py

```python
from inspect_flow import FlowJob, FlowOptions, FlowTask

FlowJob(
    log_dir="logs",
    options=FlowOptions(
        metadata={
            "experiment": "baseline_v1",
            "hypothesis": "Higher temperature improves creative tasks",
            "hardware": "A100-80GB",
        }
    ),
    tasks=[
        FlowTask(
            name="inspect_evals/gpqa_diamond",
            model="openai/gpt-4o",
            metadata={
                "task_variant": "chemistry_subset",
                "note": "Testing with reduced context",
            },
        )
    ],
)
```

The metadata from FlowOptions is applied globally to all tasks in the evaluation run, while task-level metadata is specific to each task.

flow_metadata (Flow-Only Metadata)

The flow_metadata field is available on FlowJob, FlowTask, FlowModel, FlowSolver, and FlowAgent. This metadata is not passed to Inspect AI—it exists only in the Flow configuration and is useful for configuration-time logic and organization.

Use cases:

- Filtering or selecting configurations based on properties

- Organizing complex configuration generation logic

- Documenting configuration decisions

- Annotating configs without polluting Inspect AI logs

Example: Configuration-time filtering

flow_metadata.py

```python
from inspect_flow import FlowJob, FlowModel, tasks_matrix

# Define models with metadata about capabilities
models = [
    FlowModel(name="openai/gpt-4o", flow_metadata={"context_window": 128000}),
    FlowModel(name="openai/gpt-4o-mini", flow_metadata={"context_window": 128000}),
    FlowModel(
        name="anthropic/claude-3-5-sonnet", flow_metadata={"context_window": 200000}
    ),
]

# Filter to only long-context models
long_context_models = [
    m
    for m in models
    if m.flow_metadata and m.flow_metadata.get("context_window", 0) >= 128000
]

FlowJob(
    log_dir="logs",
    tasks=tasks_matrix(
        task="long_context_task",
        model=long_context_models,
    ),
)
```

Variable Substitution

Flow Variables (--var)

Pass custom variables to Python config files using --var or the INSPECT_FLOW_VAR environment variable. Use this for dynamic configuration that isn’t available via --set. Variables are accessible via the __flow_vars__ dictionary:

```bash
flow run flow_vars.py --var task_min_priority=2
```

flow_vars.py

```python
from inspect_flow import FlowJob, FlowTask

# Convert to int in case the value is passed in as a string
task_min_priority = int(globals().get("__flow_vars__", {}).get("task_min_priority", 1))

all_tasks = [
    FlowTask(name="task_easy", flow_metadata={"priority": 1}),
    FlowTask(name="task_medium", flow_metadata={"priority": 2}),
    FlowTask(name="task_hard", flow_metadata={"priority": 3}),
]

FlowJob(
    log_dir="logs",
    tasks=[
        t
        for t in all_tasks
        if (t.flow_metadata or {}).get("priority", 0) >= task_min_priority
    ],
)
```

Template Substitution

Use {field_name} syntax to reference other FlowJob configuration values. Substitutions are applied after the config is loaded:

```python
from inspect_flow import FlowJob, FlowOptions

FlowJob(
    log_dir="logs/my_eval",
    options=FlowOptions(bundle_dir="{log_dir}/bundle"),
    # Result: bundle_dir="logs/my_eval/bundle"
)
```

For nested fields, use bracket notation: {options[eval_set_id]} or {flow_metadata[key]}. Substitutions are resolved recursively until none remain.
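Python's own str.format_map already understands both {name} and {name[key]} placeholders, so recursive substitution can be sketched as "format until nothing changes". This is an illustration, not Flow's implementation; substitute, the pass limit and the flow_metadata value are hypothetical.

```python
from typing import Any


def substitute(template: str, fields: dict[str, Any], max_passes: int = 10) -> str:
    """Resolve {field} and {field[key]} placeholders until none remain."""
    value = template
    for _ in range(max_passes):
        # format_map looks up "{name}" and "{name[key]}" in `fields`.
        new_value = value.format_map(fields)
        if new_value == value:
            return value
        value = new_value
    raise ValueError(f"unresolved placeholders after {max_passes} passes: {value!r}")


fields = {
    "log_dir": "logs/{flow_metadata[run]}",  # itself contains a placeholder
    "flow_metadata": {"run": "my_eval"},
}
print(substitute("{log_dir}/bundle", fields))  # logs/my_eval/bundle
```

One caveat of this format_map-based design: strings containing literal braces (for example, JSON) would be misread as placeholders, so a real implementation needs a stricter placeholder syntax.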

Bundle URL Mapping

Convert local bundle paths to public URLs for sharing evaluation results. The bundle_url_map in FlowOptions applies string replacements to bundle_dir to generate a shareable URL that’s printed to stdout after the evaluation completes.

bundle_url_map.py

```python
from inspect_flow import FlowJob, FlowOptions, FlowTask

FlowJob(
    log_dir="logs/my_eval",
    options=FlowOptions(
        bundle_dir="/local/storage/bundles/my_eval",
        bundle_url_map={"/local/storage": "https://example.com/shared"},
    ),
    tasks=[FlowTask(name="task", model="openai/gpt-4o")],
)
```

After running, this prints:

```text
Bundle URL: https://example.com/shared/bundles/my_eval
```

Use this when storing bundles on servers with public HTTP access or cloud storage with URL mapping. Multiple mappings are applied in order.
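The mapping is a sequence of string replacements applied to bundle_dir in order, which can be sketched with str.replace. The bundle_url helper is hypothetical and reproduces only the documented behaviour; note that str.replace substitutes every occurrence of each key.

```python
def bundle_url(bundle_dir: str, url_map: dict[str, str]) -> str:
    """Apply each (local prefix -> public URL) replacement in insertion order."""
    url = bundle_dir
    for local, public in url_map.items():  # dicts preserve insertion order
        url = url.replace(local, public)
    return url


print(bundle_url(
    "/local/storage/bundles/my_eval",
    {"/local/storage": "https://example.com/shared"},
))  # https://example.com/shared/bundles/my_eval
```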

Configuration Scripts

Included configuration files can execute validation code to enforce constraints. Files named _flow.py are automatically included from parent directories, and receive __flow_including_jobs__ containing all configs in the include chain.

Terminology:

- Including config: The file being loaded (e.g., my_config.py)
- Included config: The _flow.py file(s) automatically inherited from parent directories

The included config can inspect the including configs to validate or enforce constraints.

Prevent Runs with Uncommitted Changes

Place a _flow.py file at your repository root to validate that all configs are in clean git repositories. This validation runs automatically for all configs in subdirectories.

_flow.py

```python
import subprocess
from pathlib import Path

from inspect_flow import FlowJob

# Get all configs that are including this one
including_jobs: dict[str, FlowJob] = globals().get("__flow_including_jobs__", {})


def check_repo(path: str) -> None:
    abs_path = Path(path).resolve()
    check_dir = abs_path if abs_path.is_dir() else abs_path.parent
    result = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=check_dir,
        capture_output=True,
        text=True,
        check=True,
    )
    if result.stdout.strip():
        raise RuntimeError(f"The repository at {check_dir} has uncommitted changes.")


# Check this config and all configs including it
check_repo(__file__)
for path in including_jobs.keys():
    check_repo(path)

FlowJob()  # Return empty job for inheritance
```

config.py

```python
# Automatically inherits _flow.py
from inspect_flow import FlowJob, FlowTask

FlowJob(
    log_dir="logs",
    dependencies=["inspect-evals"],
    tasks=[FlowTask(name="inspect_evals/gpqa_diamond", model="openai/gpt-4o")],
)

# Will fail if uncommitted changes exist in the repository
```

Lock Configuration Fields

A _flow.py file can prevent configs from overriding critical settings:

_flow.py

```python
from inspect_flow import FlowJob, FlowOptions

MAX_SAMPLES = 16

# Get all configs that are including this one
including_jobs: dict[str, FlowJob] = globals().get("__flow_including_jobs__", {})

# Validate that including configs don't override MAX_SAMPLES
for file, job in including_jobs.items():
    if (
        job.options
        and job.options.max_samples is not None
        and job.options.max_samples != MAX_SAMPLES
    ):
        raise ValueError(
            f"Do not override max_samples! Error in {file} (or its includes)"
        )

FlowJob(
    options=FlowOptions(max_samples=MAX_SAMPLES),
)
```

config.py

```python
# Automatically inherits _flow.py
from inspect_flow import FlowJob, FlowOptions, FlowTask

FlowJob(
    log_dir="logs",
    options=FlowOptions(max_samples=32),  # Will raise ValueError!
    tasks=[FlowTask(name="inspect_evals/gpqa_diamond", model="openai/gpt-4o")],
)
```

This pattern is useful for enforcing organizational standards (resource limits, safety constraints, etc.) across all evaluation configs in a repository.