Flow Concepts

The primary interface for defining a workflow in Flow is a FlowSpec. A FlowSpec defines the log directory, tasks, models, and other options that make up the spec. When Inspect Flow processes the FlowSpec, it resolves the configuration into a list of evaluation tasks to be run. These tasks are provided to Inspect’s eval_set() function along with any additional configuration, and Inspect executes the tasks.

Inspect Flow mirrors Inspect AI’s object model with corresponding Flow types:

- FlowSpec — The top-level spec definition. Contains a list of tasks and spec settings. Under the hood it translates to an Inspect AI Eval Set.

- FlowTask — Configuration for a single evaluation task. Maps to Inspect AI Task parameters.

- FlowModel — Model configuration including API settings and generation settings. Maps to Inspect AI Model.

- FlowSolver and FlowAgent — Solver and agent chain configuration. Map to Inspect AI Solver and Agent.

- FlowScorer — Configuration for a Scorer. Maps to Inspect AI Scorer.

- FlowOptions — Runtime execution options. Maps to Inspect AI eval_set() parameters.

- FlowDefaults — System for setting default values across tasks, models, solvers, and agents.

- FlowDependencies — Configuration for evaluation dependencies (used in virtual environment mode).

Config Files

Flow configuration files are Python files where the last expression is either:

Since config files are standard Python code, you can use all Python features including variables, loops, comprehensions, imports, and comments. Flow configs are just Python!

This last expression requirement only applies when loading configs from files (via CLI or load_spec()). When using the Python API directly with run(), you pass the FlowSpec object programmatically—no file or last expression needed.
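The sketch below shows how a loader *could* execute a config file and capture the value of its final expression using Python's `ast` module. It is not Flow's actual loader (use `load_spec()` for that). The function name `eval_last_expression` is hypothetical, and the example config ends in a plain dict rather than a `FlowSpec` so that it runs without Flow installed.

```python
import ast
from typing import Any


def eval_last_expression(source: str, filename: str = "<config>") -> Any:
    """Execute a Python config and return the value of its final expression.

    Hypothetical helper for illustration; this is not inspect_flow's loader.
    """
    tree = ast.parse(source, filename=filename)
    if not tree.body or not isinstance(tree.body[-1], ast.Expr):
        raise ValueError(f"{filename}: the last statement must be an expression")
    # Split the module into the leading statements and the final expression.
    setup = ast.Module(body=tree.body[:-1], type_ignores=[])
    last = ast.Expression(body=tree.body[-1].value)
    namespace: dict[str, Any] = {"__name__": "__flow_config__"}
    exec(compile(setup, filename, "exec"), namespace)
    return eval(compile(last, filename, "eval"), namespace)


# A stand-in config: a plain dict replaces FlowSpec so this runs without Flow installed.
config_source = """
models = ["openai/gpt-5", "anthropic/claude-3-5-sonnet"]
{
    "log_dir": "logs",
    "tasks": [{"name": "inspect_evals/gpqa_diamond", "model": m} for m in models],
}
"""
spec = eval_last_expression(config_source)
print(len(spec["tasks"]))  # 2
```

Because the leading statements run in the same namespace as the final expression, variables, loops, and comprehensions defined earlier in the file are visible to it.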

Basic Example

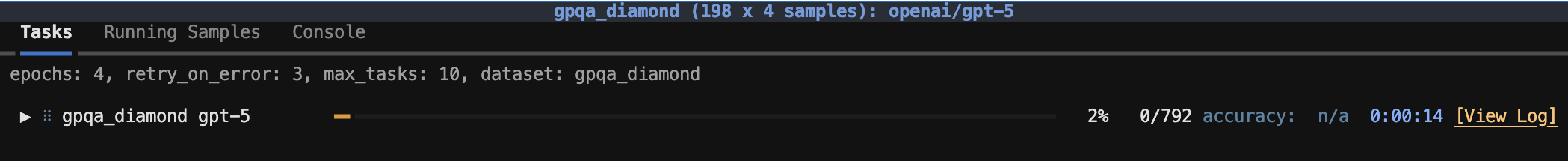

In Welcome - Basic Example, we showed a simple FlowSpec. Let’s break down what’s happening:

config.py

```python
from inspect_flow import FlowSpec, FlowTask

FlowSpec(
    log_dir="logs",  # Specify the directory for storing logs
    tasks=[  # List evaluation tasks to run
        FlowTask(
            name="inspect_evals/gpqa_diamond",  # Specify task from registry by name
            model="openai/gpt-5",  # Specify model to evaluate by name
        ),
    ],
)
```

To run the task, first ensure you have the necessary dependencies installed (inspect-evals and openai for this example), then run the following command in your shell.

```bash
flow run config.py
```

Alternatively, use the `--venv` flag to automatically install dependencies in an isolated virtual environment:

```bash
flow run config.py --venv
```

What happens when you run this (default in-process mode)?

- Flow loads the `gpqa_diamond` task from the `inspect_evals` registry
- Runs the evaluation with GPT-5 in the current Python process
- Stores results in `logs/`

When using --venv, Flow additionally creates an isolated virtual environment, installs dependencies automatically, executes the evaluation in that environment, and cleans up the temporary environment after completion.
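The create, install, run, and clean-up lifecycle described above can be approximated with the standard library's `venv`, `subprocess`, and `tempfile` modules. This is a simplified sketch, not Flow's implementation: Flow's tooling and dependency detection may differ, and `run_in_temp_venv` is a hypothetical name. Installing packages needs network access, so the usage below runs code without extra packages.

```python
from __future__ import annotations

import os
import subprocess
import tempfile
import venv
from pathlib import Path


def venv_python(env_dir: Path) -> Path:
    """Return the interpreter path inside a virtual environment."""
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


def run_in_temp_venv(code: str, packages: list[str] | None = None) -> tuple[str, Path]:
    """Create a throwaway venv, optionally pip-install packages, run code, delete the venv.

    Hypothetical helper for illustration; not part of inspect_flow.
    """
    packages = packages or []
    with tempfile.TemporaryDirectory(prefix="flow-venv-") as tmp:
        env_dir = Path(tmp) / "env"
        # Bootstrap pip only when there is something to install.
        venv.EnvBuilder(with_pip=bool(packages)).create(env_dir)
        python = venv_python(env_dir)
        if packages:
            subprocess.run([str(python), "-m", "pip", "install", *packages], check=True)
        result = subprocess.run(
            [str(python), "-c", code], check=True, capture_output=True, text=True
        )
    # Leaving the with-block deleted the temporary directory, venv included.
    return result.stdout.strip(), env_dir


# Runs in an isolated interpreter, so sys.prefix differs from the base install.
out, env_dir = run_in_temp_venv("import sys; print(sys.prefix != sys.base_prefix)")
print(out)  # True
print(env_dir.exists())  # False
```

Tying the environment's lifetime to a `TemporaryDirectory` context guarantees cleanup even if installation or the run raises an exception.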

Specs

FlowSpec is the primary interface for defining Flow workflows. All Flow operations—including parameter sweeps and matrix expansions—ultimately produce a list of tasks that FlowSpec executes.
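Because every workflow ends up as a flat task list, a parameter sweep is essentially a Cartesian product. The sketch below uses plain dicts and `itertools.product` to show the idea. It does not use Flow's own sweep or matrix helpers; the keys simply mirror the `FlowTask` fields shown on this page.

```python
from itertools import product

task_names = ["inspect_evals/gpqa_diamond", "inspect_evals/mmlu_0_shot"]
models = ["openai/gpt-5", "anthropic/claude-3-5-sonnet"]
temperatures = [0.0, 0.7]

# One entry per (task, model, temperature) combination: 2 * 2 * 2 = 8 tasks.
tasks = [
    {"name": name, "model": model, "config": {"temperature": temp}}
    for name, model, temp in product(task_names, models, temperatures)
]

print(len(tasks))  # 8
print(tasks[0])
# {'name': 'inspect_evals/gpqa_diamond', 'model': 'openai/gpt-5', 'config': {'temperature': 0.0}}
```

In a real config file, the same comprehension could build `FlowTask(name=..., model=..., config=GenerateConfig(...))` objects and pass them to `FlowSpec(tasks=...)`.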

Required fields:

| Field | Description |
|---|---|
| `log_dir` | Output path for logging results. Supports S3 paths (e.g., `s3://bucket/path`). |

Optional fields:

| Field | Description | Default |
|---|---|---|
| `tasks` | List of tasks to run (FlowTask objects, Inspect AI Task objects, or strings) | `None` |
| `includes` | List of other flow configs to include (paths as strings). Relative paths are resolved relative to the config file (CLI) or the `base_dir` argument (API). Additionally, any `_flow.py` files in the same directory or parent directories are included automatically. | `None` |
| `log_dir_create_unique` | If `True`, append a numeric suffix to `log_dir` if it already exists. If `False`, use the existing directory (it must contain only logs from tasks in the spec, or `options.log_dir_allow_dirty` must be `True`). | `False` |
| `execution_type` | Execution environment: `"inproc"` runs tasks in the current Python process; `"venv"` runs tasks in an isolated virtual environment. | `"inproc"` |
| `python_version` | Python version for the isolated virtual environment when `execution_type="venv"` (e.g., `"3.11"`) | Same as current environment |
| `dependencies` | FlowDependencies object configuring package installation in venv mode. Controls dependency file detection, additional packages, and auto-detection behavior. Ignored in inproc mode. | Auto-detected from `pyproject.toml` or `requirements.txt` and from config object names (venv mode only) |
| `env` | Environment variables to set when running tasks | `None` |
| `options` | Runtime options passed to `eval_set()` (see FlowOptions reference) | `None` |
| `defaults` | Default values applied across tasks, models, solvers, and agents (see Defaults and FlowDefaults reference) | `None` |
| `flow_metadata` | Metadata stored in the flow config (not passed to Inspect AI; see flow_metadata) | `None` |
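The two `includes` rules in the table above can be sketched as path resolution plus an upward directory walk. The helper below is hypothetical and models only those stated rules. Relative include paths resolve against the config file's directory (the CLI case), and `_flow.py` files in that directory or any parent are picked up automatically. The outermost-first ordering and de-duplication are assumptions, not documented Flow behavior.

```python
import tempfile
from pathlib import Path


def resolve_includes(config_file: Path, includes: list[str]) -> list[Path]:
    """Sketch of include resolution for a config file. Hypothetical; not inspect_flow's API."""
    config_path = config_file.resolve()
    base_dir = config_path.parent
    # Rule 1: any _flow.py in the config's directory or a parent directory.
    # Assumption: list them outermost first so closer files come later.
    candidates = [d / "_flow.py" for d in reversed([base_dir, *base_dir.parents])]
    auto = [p for p in candidates if p.is_file()]
    # Rule 2: explicit includes; relative paths resolve against the config's directory.
    explicit = [(base_dir / inc).resolve() for inc in includes]
    # Keep first occurrences only, and never include the config file itself.
    result: list[Path] = []
    for path in auto + explicit:
        if path != config_path and path not in result:
            result.append(path)
    return result


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp).resolve()
    evals_dir = root / "project" / "evals"
    evals_dir.mkdir(parents=True)
    (root / "project" / "_flow.py").write_text("# shared settings\n")
    (evals_dir / "_flow.py").write_text("# eval-specific settings\n")
    (root / "project" / "common.py").write_text("# shared config\n")
    config = evals_dir / "config.py"
    config.write_text("# main config\n")
    included = resolve_includes(config, ["../common.py"])
    # Only report files created above (ignores any _flow.py outside the temp dir).
    rel = [p.relative_to(root).as_posix() for p in included if root in p.parents]

print(rel)  # ['project/_flow.py', 'project/evals/_flow.py', 'project/common.py']
```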

Tasks

FlowTask defines the configuration for a single evaluation. Each FlowTask maps to an Inspect AI Task and accepts the same parameters—including the model to evaluate, solver chain, generation config, sandbox environment, and dataset filters.

Specification

Flow supports multiple ways to specify tasks, from simple registry names to programmatic Task objects. Choose the approach that best fits your needs:

- Registry names and file paths: Simple string-based references for tasks from packages or local files
- Task factory functions: Use `FlowTask(factory=...)` when you have a function that returns a Task object but want to override parameters like model or config
- Direct Task objects: Pass Task objects directly to `FlowSpec.tasks` when you don't need Flow-level parameter overrides
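The string-based forms listed above (registry name, file path, and file with function) can be told apart syntactically. The parser below is a hypothetical sketch of that distinction. It assumes file references start with `./`, `../`, or `/` or end in `.py`, and that `@` separates a file from a function name; Flow's real resolution logic may apply other rules.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TaskRef:
    kind: str  # "registry" or "file"
    target: str  # registry name or file path
    function: Optional[str] = None  # function name after "@", if any


def parse_task_ref(ref: str) -> TaskRef:
    """Classify a task string. Hypothetical sketch; not inspect_flow's resolver."""
    path, _, func = ref.partition("@")
    # Assumption: file references look like paths or end in .py.
    is_file = path.startswith(("./", "../", "/")) or path.endswith(".py")
    if not is_file:
        return TaskRef("registry", ref)
    return TaskRef("file", path, func or None)


for ref in ["inspect_evals/gpqa_diamond", "./my_task.py", "./my_task.py@custom_eval"]:
    print(parse_task_ref(ref))
```

A file reference without `@function` leaves `function` as `None`, which corresponds to the "discover every `@task` function in the file" case.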

Registry Name

```python
from inspect_flow import FlowTask

# Tasks from installed packages
FlowTask(
    name="inspect_evals/gpqa_diamond",
    model="openai/gpt-5",
)
```

File Path

```python
from inspect_flow import FlowTask

# Auto-discovers `@task` decorated functions in the specified file and creates a task for each of them
FlowTask(
    name="./my_task.py",
    model="openai/gpt-5",
)
```

File with Function

```python
from inspect_flow import FlowTask

# Explicitly selects a specific function from the file
FlowTask(
    name="./my_task.py@custom_eval",
    model="openai/gpt-5",
)
```

Task Factory Function

```python
# Use a factory function that returns an Inspect AI Task
from inspect_ai import Task, task
from inspect_ai.dataset import example_dataset
from inspect_ai.solver import generate

from inspect_flow import FlowTask


# The @task decorator makes the function available in the registry and
# ensures that tasks with different args are considered unique
@task
def my_task_factory():
    return Task(dataset=example_dataset("security_guide"), solver=generate())


FlowTask(
    factory=my_task_factory,
    model="openai/gpt-5",  # Override the model
)
```

Direct Task Object in FlowSpec

direct_task_object.py

```python
# Pass Task objects directly to FlowSpec.tasks
# Use this when you don't need to override task parameters
from inspect_ai import Task, task
from inspect_ai.dataset import example_dataset
from inspect_ai.solver import generate

from inspect_flow import FlowSpec


@task  # Optional: use @task decorator to make function available in the registry
def my_custom_task():
    return Task(
        dataset=example_dataset("security_guide"),
        solver=generate(),
    )


FlowSpec(
    log_dir="logs",
    tasks=[
        my_custom_task(),
        "inspect_evals/gpqa_diamond",
    ],
)
```

Configuration

FlowTask accepts parameters that map to Inspect AI Task fields. The examples below show commonly used fields; see the FlowTask reference documentation for the complete list of available parameters.

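```python
from inspect_ai.model import GenerateConfig
from inspect_flow import FlowExtraArgs, FlowTask

FlowTask(
    name="inspect_evals/mmlu_0_shot",  # 1. Task name or factory
    model="openai/gpt-5",  # 2. Model
    epochs=3,  # 3. Epochs
    config=GenerateConfig(  # 4. Generation config
        temperature=0.7,
        max_tokens=1000,
    ),
    solver="chain_of_thought",  # 5. Solver chain
    args={"subject": "physics"},  # 6. Task arguments
    sandbox="docker",  # 7. Sandbox environment
    sample_id=[0, 1, 2],  # 8. Sample selection
    extra_args=FlowExtraArgs(  # 9. Extra arguments
        solver={"max_attempts": 3}
    ),
)
```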

1. Task name or factory: Use `name` to specify a task by registry name (`"inspect_evals/mmlu"`), file path (`"./task.py"`), or file with function (`"./task.py@eval_fn"`). Alternatively, use `factory` to provide a callable that returns an Inspect AI `Task` object (see the Task Factory Function example above). When using `factory`, you can still override task parameters such as `model`, `solver`, or `config` via other FlowTask fields.
2. Model: Maps to Inspect AI `Task.model`. Optional model for this task; if not specified, the model from the `INSPECT_EVAL_MODEL` environment variable is used. Can be a string (`"openai/gpt-5"`), a FlowModel object for advanced configuration, or an Inspect AI `Model` object.
3. Epochs: Maps to Inspect AI `Task.epochs`. Number of times to repeat evaluation over the dataset samples. Can be an integer (`epochs=3`) or a FlowEpochs object that specifies custom reducer functions (`FlowEpochs(epochs=3, reducer="median")`). By default, scores are combined across epochs using the `"mean"` reducer.
4. Generation config: Maps to Inspect AI `Task.config` (`GenerateConfig`). Model generation parameters such as `temperature`, `max_tokens`, `top_p`, and `reasoning_effort`. These settings override config on `FlowSpec.config` but are overridden by settings on `FlowModel.config`.
5. Solver chain: Maps to Inspect AI `Task.solver`. The algorithm(s) for solving the task. Can be a string (`"chain_of_thought"`), a FlowSolver object, a FlowAgent object, an Inspect AI `Solver` or `Agent` object, or a list of solvers for chaining. Defaults to `generate()` if not specified.
6. Task arguments: Maps to task function parameters. Dictionary of arguments passed to the task constructor or `@task` decorated function. Enables parameterization of tasks (e.g., selecting dataset subsets or configuring difficulty levels).
7. Sandbox environment: Maps to Inspect AI `Task.sandbox`. Can be a string (`"docker"`, `"local"`), a tuple with additional config, or a `SandboxEnvironmentType` object.
8. Sample selection: Evaluate specific samples from the dataset. Accepts a single ID (`sample_id=0`), a list of IDs (`sample_id=[0, 1, 2]`), or a list of string IDs.
9. Extra arguments: Additional arguments to pass when creating Inspect AI objects (models, solvers, agents, scorers) for this specific task. Useful for per-task customization, such as providing different tools to an agent depending on the task. Values in `extra_args` override any args specified in the object's own `args` field.
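Two override rules appear in the list above. Generation config set on the task overrides `FlowSpec.config` but is itself overridden by `FlowModel.config`, and `extra_args` values override an object's own `args`. Both amount to a layered merge where later layers win for the fields they actually set. The sketch below models this with plain dicts, treating `None` as "unset". That is an assumption (it mirrors how `GenerateConfig` leaves unspecified fields as `None`), not Flow's merge code, and the `template` argument is hypothetical.

```python
from typing import Any, Optional


def layered(*layers: Optional[dict[str, Any]]) -> dict[str, Any]:
    """Merge dicts left to right: later layers win, and None values count as unset."""
    merged: dict[str, Any] = {}
    for layer in layers:
        for key, value in (layer or {}).items():
            if value is not None:
                merged[key] = value
    return merged


# Generation config precedence: spec < task < model.
spec_config = {"temperature": 0.0, "max_tokens": 500}
task_config = {"temperature": 0.7, "max_tokens": 1000}
model_config = {"temperature": None, "reasoning_effort": "medium"}
effective_config = layered(spec_config, task_config, model_config)
print(effective_config)
# {'temperature': 0.7, 'max_tokens': 1000, 'reasoning_effort': 'medium'}

# extra_args override the object's own args ("template" is a hypothetical arg).
solver_args = {"max_attempts": 1, "template": "default"}
extra_args = {"max_attempts": 3}
effective_args = layered(solver_args, extra_args)
print(effective_args)
# {'max_attempts': 3, 'template': 'default'}
```

Treating `None` as "unset" keeps the merge simple. A real implementation would need a dedicated sentinel value if `None` is a meaningful argument.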

Models

When specifying a task, you can provide the model for the task as a string with a model name, a FlowModel, or an Inspect AI Model object. When using the simple string, the default values for that model will be used. For example:

```python
FlowTask(name="task", model="openai/gpt-5")
```

FlowModel lets you specify additional parameters for the model. Use it when you need a custom `GenerateConfig`, a different API endpoint, a custom API key for a specific model, or other custom configuration. For example:

```python
from inspect_ai.model import GenerateConfig
from inspect_flow import FlowModel, FlowTask

FlowTask(
    name="task",
    model=FlowModel(
        name="openai/gpt-5",
        config=GenerateConfig(
            reasoning_effort="medium",
            max_connections=10,
        ),
        base_url="https://custom-endpoint.com",
        api_key="${CUSTOM_API_KEY}",
    ),
)
```

You can also pass an Inspect AI Model object directly when you need programmatic model configuration:

```python
from inspect_ai.model import GenerateConfig, get_model
from inspect_flow import FlowTask

# Create a Model instance with custom configuration
custom_model = get_model(
    "openai/gpt-5",
    config=GenerateConfig(reasoning_effort="high"),
    base_url="https://custom-endpoint.com",
)

FlowTask(
    name="task",
    model=custom_model,
)
```

Model Roles

For agent evaluations with multiple model roles, use model_roles to specify which model to use for each role. For example:

```python
from inspect_flow import FlowTask

# For agent evaluations with multiple roles
FlowTask(
    name="multi_agent_task",
    model_roles={
        "assistant": "openai/gpt-5",
        "critic": "anthropic/claude-3-5-sonnet",
    },
)
```
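Conceptually, `model_roles` is a mapping from role name to model. The hypothetical resolver below shows one way to look up the model for a role, falling back to a default model when a role is not bound. The fallback rule is an assumption made for illustration; check Inspect AI's model roles documentation for the actual behavior.

```python
from typing import Mapping, Optional


def model_for_role(
    role: str,
    model_roles: Mapping[str, str],
    default_model: Optional[str] = None,
) -> str:
    """Return the model bound to `role`, else the default. Hypothetical helper."""
    if role in model_roles:
        return model_roles[role]
    if default_model is None:
        raise KeyError(f"no model bound to role {role!r} and no default model")
    return default_model


roles = {"assistant": "openai/gpt-5", "critic": "anthropic/claude-3-5-sonnet"}
print(model_for_role("critic", roles))  # anthropic/claude-3-5-sonnet
print(model_for_role("grader", roles, default_model="openai/gpt-5"))  # openai/gpt-5
```

Raising on an unbound role with no default surfaces configuration mistakes early rather than silently evaluating the wrong model.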